"Any sufficiently advanced technology is indistinguishable from magic." - Arthur C. Clarke

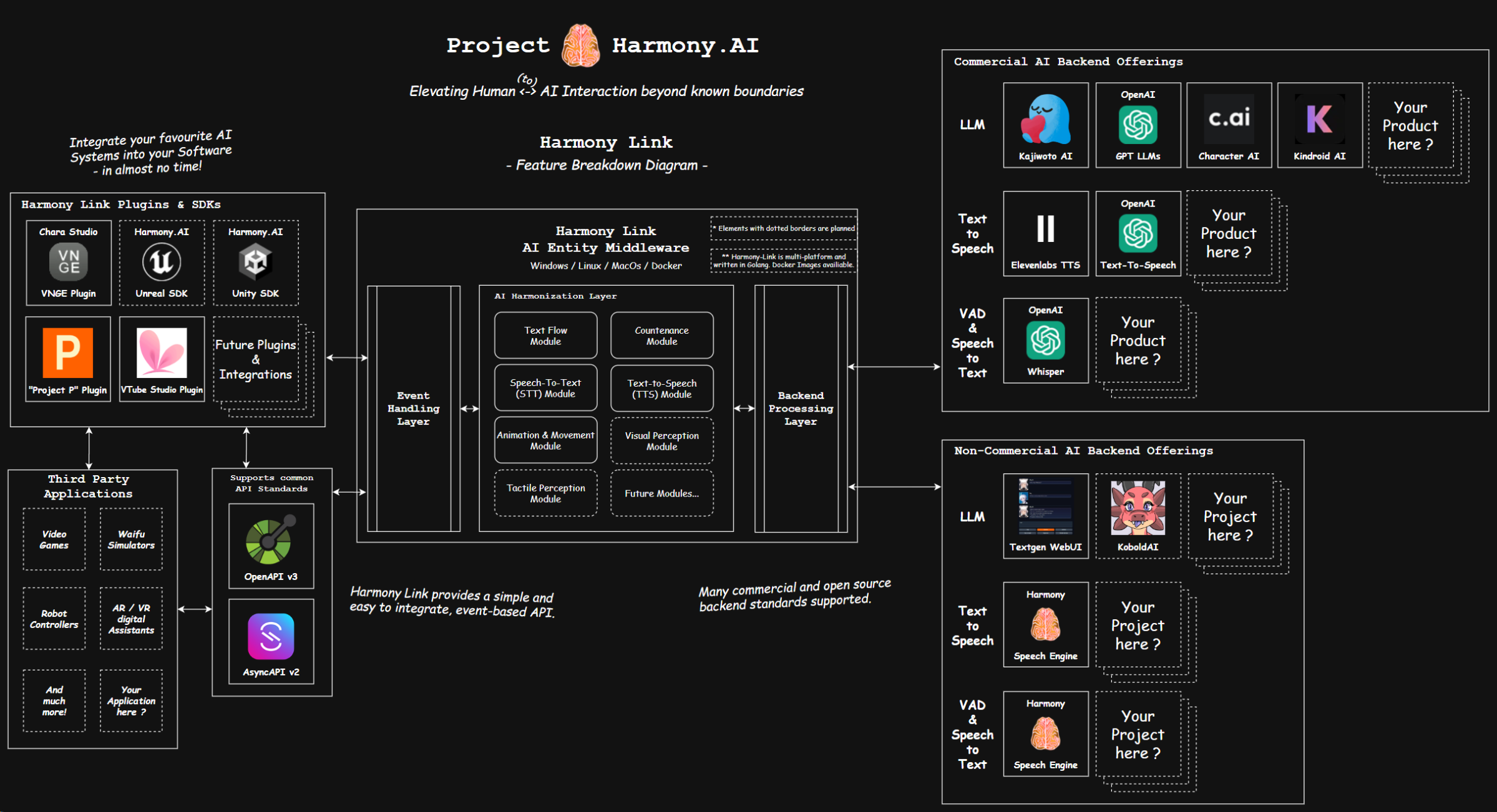

Harmony Link - AI Agent Middleware

Harmony Link is a middleware software for controlling AI agents and providing them with multi-modal capabilities.

It's designed to be modular, so the different modules can be used selectively on a per-character basis. For example, a character with static dialogue might only need the TTS module. Another might only require STT and an LLM to process or annotate the user's spoken utterances without ever talking back (e.g. an animal the user is interacting with).

However, the real magic of Harmony Link lies in connecting all these modules so that they work together in a multi-modal way. Together they form a realistic character experience, which only needs to be applied to the character's actual avatar in the virtual environment through a Plugin.
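To make the per-character modularity a bit more concrete, here is a minimal Python sketch. It only illustrates selecting modules per character and chaining them; it does not model the harmonization layer itself. The module names, the fixed STT → LLM → TTS order, `CharacterProfile` and the stub handlers are all hypothetical illustrations, not Harmony Link's actual configuration format or API.

```python
from dataclasses import dataclass
from typing import Callable, Dict, FrozenSet, List

# Hypothetical processing order: speech is transcribed, processed by an LLM,
# and the result is spoken back. Real Harmony Link modules are not modeled here.
PIPELINE_ORDER: List[str] = ["stt", "llm", "tts"]


@dataclass(frozen=True)
class CharacterProfile:
    """Hypothetical per-character configuration listing enabled modules."""
    name: str
    modules: FrozenSet[str]


def build_pipeline(profile: CharacterProfile) -> List[str]:
    """Return the character's enabled modules in processing order."""
    unknown = profile.modules - set(PIPELINE_ORDER)
    if unknown:
        raise ValueError(f"unknown modules for {profile.name}: {sorted(unknown)}")
    return [step for step in PIPELINE_ORDER if step in profile.modules]


def run(profile: CharacterProfile,
        handlers: Dict[str, Callable[[str], str]],
        payload: str) -> str:
    """Feed the payload through each enabled module, in order."""
    for step in build_pipeline(profile):
        payload = handlers[step](payload)
    return payload


# A character with static dialogue only needs TTS ...
narrator = CharacterProfile("narrator", frozenset({"tts"}))
# ... while an animal listens and annotates, but never talks back.
animal = CharacterProfile("animal", frozenset({"stt", "llm"}))

# Stub handlers standing in for real modules.
handlers = {
    "stt": lambda audio: f"text({audio})",
    "llm": lambda text: f"annotated({text})",
    "tts": lambda text: f"speech({text})",
}

print(build_pipeline(narrator))            # ['tts']
print(run(animal, handlers, "utterance"))  # annotated(text(utterance))
```

Keeping the order fixed and filtering it per character means that disabling a module simply skips that step, rather than requiring a separate pipeline definition for every character type.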

Core Features:

- AI Harmonization Layer to achieve multi-modality between modules

- Support for a growing variety of existing AI technologies

- Unified Event System with a standardized API (OpenAPI / AsyncAPI)

- Extensible Plugin System for easy integration

- Optimized for low latency and high performance

- Platform-independent (runs on Windows, Linux, Mac and Android)

Want to test it? There's a Harmony Link setup tutorial on YouTube, along with additional tutorials for Plugins and local AI setup.

Check out our YouTube Channel.

Technical Documentation: GitHub

Available Modules and Backends:

TTS Module:

STT Module:

Countenance Module:

Movement Module:

Perception Module: (coming soon)

Available Plugins:

ProjectP-Plugin

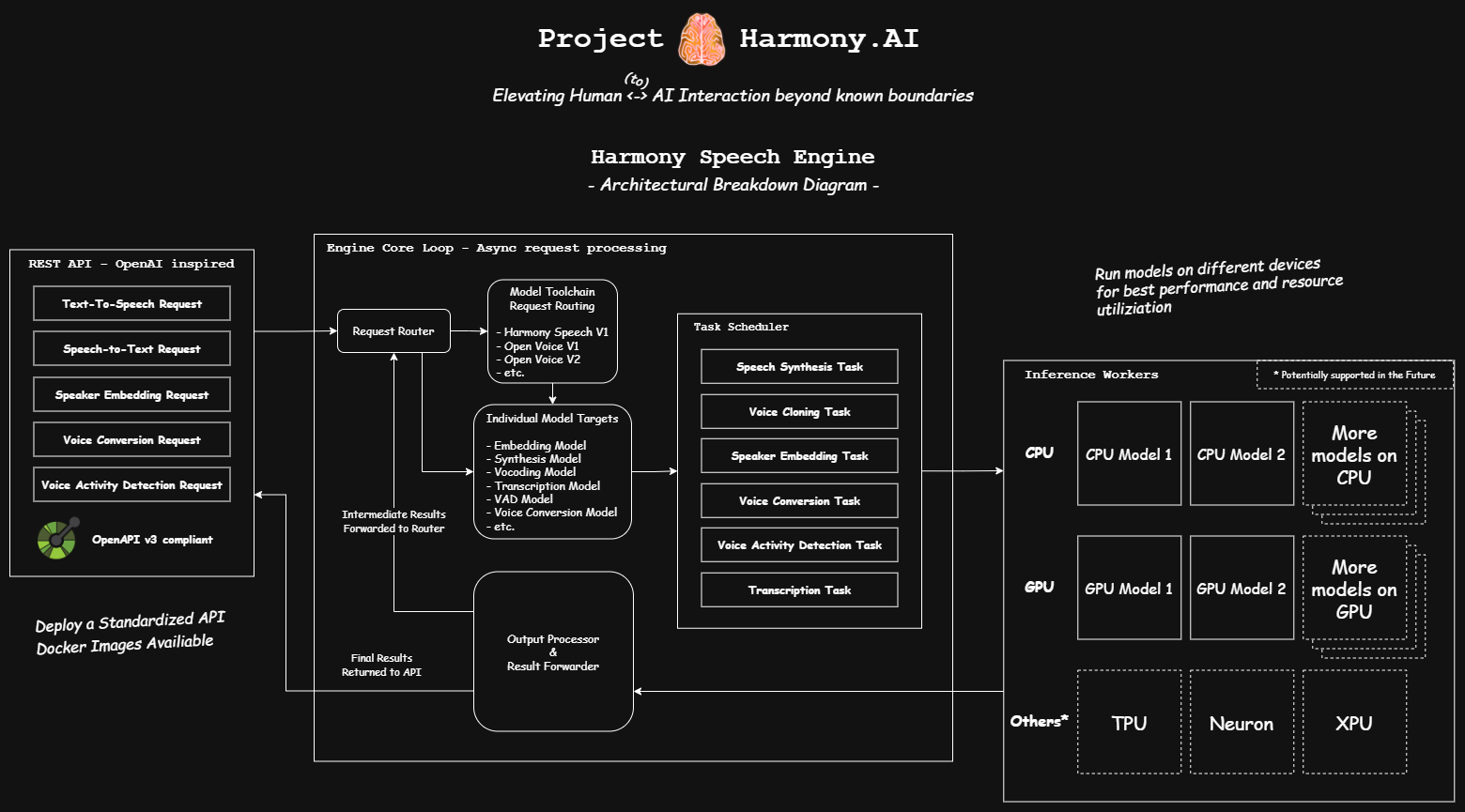

Harmony Speech Engine - AI Voice Inference Engine

Harmony Speech is a high-performance AI speech engine that enables faster-than-realtime AI speech generation, 'zero-shot' voice cloning and speech recognition using state-of-the-art open source AI voice technology.

Core Features:

- OpenAI-style APIs for:
  - Text-to-Speech
  - Speech-to-Text
  - Voice Conversion
  - Speech Embedding

- Multi-Model & Parallel Model Processing Support.

- Toolchain-based request processing & internal re-routing between models working together.

- Extensible for adding future Open Source AI Speech models & toolchains.

- GPU & CPU Inference

- Efficient batch processing of requests

- Hugging Face Integration

- Interactive UI

- Docker Support
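As a rough illustration of what an "OpenAI-style" API means for a client, the sketch below builds a text-to-speech request shaped like OpenAI's `POST /v1/audio/speech` call (JSON fields `model`, `input` and `voice`), using only the Python standard library. It assumes, without confirmation from this page, that Harmony Speech Engine accepts the same route and fields. The base URL, model name and voice name are hypothetical placeholders, so check the technical documentation for the real values.

```python
import json
import urllib.request

# Hypothetical base URL; the actual host, port and routes may differ.
BASE_URL = "http://localhost:8000"


def build_speech_request(text: str, model: str, voice: str,
                         base_url: str = BASE_URL) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style text-to-speech request."""
    payload = {
        "model": model,  # hypothetical model identifier
        "input": text,   # text to synthesize, as in OpenAI's speech API
        "voice": voice,  # hypothetical voice / speaker identifier
    }
    return urllib.request.Request(
        url=f"{base_url}/v1/audio/speech",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    req = build_speech_request("Hello there!", model="example-tts-model",
                               voice="example-voice")
    # Sending it requires a running engine, e.g.:
    # with urllib.request.urlopen(req) as resp:
    #     audio_bytes = resp.read()
    print(req.get_method(), req.full_url)
```

Separating request construction from sending keeps the payload shape easy to inspect and test without a running server.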

Technical Documentation: GitHub

Supported Model architectures:

About our Harmony Speech V1 Model Family

Version 1 of Harmony Speech is one of the first AI technologies created by Project Harmony.AI.

The goal was to build an AI voice cloning engine that preserves the speaker identity of any voice during speech generation and enables faster-than-realtime voice generation, even in a CPU-only environment.
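"Faster-than-realtime" is commonly quantified with the real-time factor (RTF): the time spent generating audio divided by the duration of the audio produced, so an RTF below 1 means speech is generated faster than it plays back. The small Python sketch below shows the arithmetic. The sample rate and timings are illustrative numbers chosen for the example, not measured Harmony Speech V1 results.

```python
def audio_duration(num_samples: int, sample_rate: int) -> float:
    """Duration in seconds of a single-channel clip."""
    return num_samples / sample_rate


def real_time_factor(processing_seconds: float, audio_seconds: float) -> float:
    """RTF = time spent generating audio / duration of that audio."""
    if audio_seconds <= 0:
        raise ValueError("audio duration must be positive")
    return processing_seconds / audio_seconds


def is_faster_than_realtime(processing_seconds: float, audio_seconds: float) -> bool:
    """True when the audio is produced quicker than it takes to play."""
    return real_time_factor(processing_seconds, audio_seconds) < 1.0


# Illustrative numbers only: 110250 samples at 22050 Hz is 5 s of speech,
# here assumed to be generated in 2 s of compute.
clip = audio_duration(110_250, 22_050)
print(clip)                                # 5.0
print(real_time_factor(2.0, clip))         # 0.4
print(is_faster_than_realtime(2.0, clip))  # True
```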

As part of Harmony.AI Release Version 0.2.1, we're open sourcing these model weights under the Apache License on Hugging Face.

The inference code is part of our Harmony Speech Engine codebase.

Given the recent advancements in open source AI speech technology and our current lack of development capacity, we concluded that we can best support our community by building an inference engine with a unified API for the huge variety of speech-related AI models and toolchains, rather than by training additional custom models. Future releases of Harmony Speech Engine will make more, and higher-quality, open source AI speech models available for speech generation.
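A unified API over many models and toolchains can be pictured as a router that maps each task to an ordered chain of models and feeds each model's output into the next. The sketch below is a hypothetical illustration in plain Python: `ToolchainRouter`, the task name `voice-cloning` and both stub models are invented for this example and do not describe Harmony Speech Engine's internal design.

```python
from typing import Callable, Dict, List

# A "model" here is any function that takes and returns a request dict.
Model = Callable[[dict], dict]


class ToolchainRouter:
    """Hypothetical router: one entry point, many chains of models."""

    def __init__(self) -> None:
        self._models: Dict[str, Model] = {}
        self._toolchains: Dict[str, List[str]] = {}

    def register_model(self, name: str, model: Model) -> None:
        self._models[name] = model

    def register_toolchain(self, task: str, model_names: List[str]) -> None:
        # Refuse chains that reference models nobody registered.
        missing = [m for m in model_names if m not in self._models]
        if missing:
            raise KeyError(f"unregistered models: {missing}")
        self._toolchains[task] = list(model_names)

    def handle(self, task: str, request: dict) -> dict:
        if task not in self._toolchains:
            raise KeyError(f"no toolchain for task {task!r}")
        data = dict(request)
        # Re-route internally: each model's output becomes the next model's input.
        for name in self._toolchains[task]:
            data = self._models[name](data)
        return data


# Stub models standing in for a speaker encoder and a speech synthesizer.
router = ToolchainRouter()
router.register_model(
    "speaker-encoder",
    lambda d: {**d, "embedding": [len(d["reference_audio"])]},
)
router.register_model(
    "synthesizer",
    lambda d: {**d, "audio": f"speech({d['input']}, {d['embedding']})"},
)
router.register_toolchain("voice-cloning", ["speaker-encoder", "synthesizer"])

result = router.handle("voice-cloning", {"input": "Hi", "reference_audio": b"abc"})
print(result["audio"])  # speech(Hi, [3])
```

Because clients only name a task, new models or chains can be registered behind the same entry point without changing the client-facing API.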

Local Harmony.AI Quickstart Suite

With Harmony Release 0.2.1 we're introducing our local AI Quickstart suite.

The Quickstart suite contains scripts and configurations for quickly setting up Harmony AI modules for running locally.

It takes a simple approach based on Docker Compose files and Docker images provided via Docker Hub. The default configuration consists of:

- Harmony Link, our agentic runtime

- Our Text-Generation Web-UI fork, a slightly modified version of Oobabooga's great repository.

- Harmony Speech Engine, our custom inference engine for AI voice generation, voice cloning and Speech transcription.

All Docker images and revisions can be found on Docker Hub.

It allows you to run all parts of Harmony.AI's tech stack locally with very few setup steps. Customized experiences will of course still require some tweaking, but we want to continuously improve this suite to make it more user-friendly, while providing all the tools and technologies needed for a safe and cost-effective local AI setup.

Check out our local AI setup tutorial on YouTube.

Source Code & Readme: GitHub